41 NLNL: Negative Learning for Noisy Labels

NLNL Negative Learning For Noisy Labels - Open Source Agenda. @inproceedings{kim2019nlnl, title={Nlnl: Negative learning for noisy labels}, author={Kim, Youngdong and Yim, Junho and Yun, Juseung and Kim, Junmo}, booktitle={Proceedings of the IEEE International Conference on Computer Vision}, pages={101--110}, year={2019} }

GitHub - subeeshvasu/Awesome-Learning-with-Label-Noise: A ... 2019-ICCV - NLNL: Negative Learning for Noisy Labels. 2019-ICCV - Symmetric Cross Entropy for Robust Learning With Noisy Labels. 2019-ICCV - Co-Mining: Deep Face Recognition With Noisy Labels. 2019-ICCV - O2U-Net: A Simple Noisy Label Detection Approach for Deep Neural Networks.

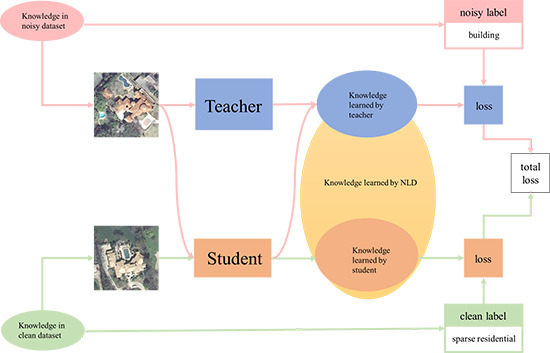

Joint Negative and Positive Learning for Noisy Labels. Youngdong Kim, Juseung Yun, Hyounguk Shon, Junmo Kim. Training Convolutional Neural Networks (CNNs) on data with noisy labels is known to be a challenge.

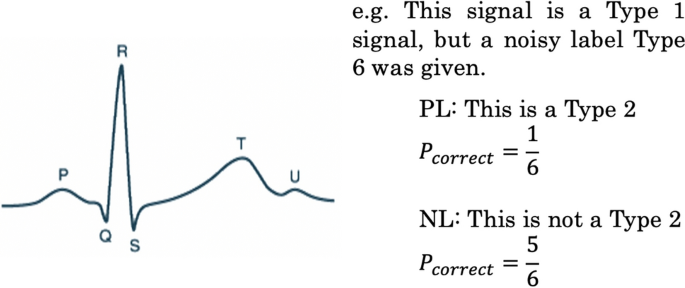

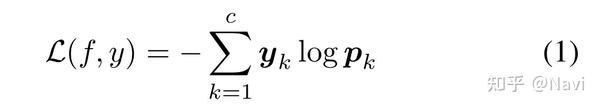

ICCV 2019 Open Access Repository. Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).

[1908.07387v1] NLNL: Negative Learning for Noisy Labels. [Submitted on 19 Aug 2019] Youngdong Kim, Junho Yim, Juseung Yun, Junmo Kim. Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification.

Joint Negative and Positive Learning for Noisy Labels | DeepAI. NL [kim2019nlnl] is an indirect learning method for training CNNs with noisy data. Instead of using the given labels, it chooses a random complementary label ȳ and trains CNNs as in "input image does not belong to this complementary label." The loss function following this definition is as below, along with the classic PL loss function for comparison:
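The DeepAI excerpt refers to a PL loss and an NL loss, but the equations did not survive in the snippet. A reconstruction is sketched here under stated assumptions: $p = f(x)$ is the softmax output over $c$ classes, and $y$ and $\bar{y}$ are one-hot vectors for the given label and the complementary label. The notation is an assumption and should be checked against the paper.

$$
\mathcal{L}_{\mathrm{PL}}(f, y) = -\sum_{k=1}^{c} y_k \log p_k
\qquad
\mathcal{L}_{\mathrm{NL}}(f, \bar{y}) = -\sum_{k=1}^{c} \bar{y}_k \log\left(1 - p_k\right)
$$

Minimizing the PL loss pushes the probability of the given label toward 1, while minimizing the NL loss pushes the probability of the complementary label toward 0.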

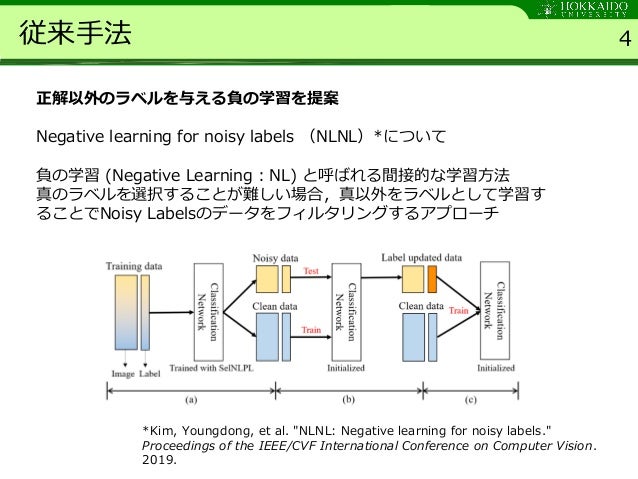

ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels - GitHub. NLNL: Negative Learning for Noisy Labels. Contribute to ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels development by creating an account on GitHub.

CiteSeerX - A.: Learning with noisy labels. Moreover, random label noise is class-conditional: the flip probability depends on the class. We provide two approaches to suitably modify any given surrogate loss function. First, we provide a simple unbiased estimator of any loss, and obtain performance bounds for empirical risk minimization in the presence of iid data with noisy labels.

NLNL: Negative Learning for Noisy Labels - Baidu Scholar. Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The classical method of training CNNs is by labeling images in a supervised manner as in "input image belongs to this label" (Positive Learning; PL), which is a fast and accurate method if the labels ...

NLNL: Negative Learning for Noisy Labels - NASA/ADS. Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).

NLNL: Negative Learning for Noisy Labels (ICCV2019) - pudn.com. The authors call this method NL (Negative Learning). Equation (2) drives the probability of the complementary label toward zero, which raises the probabilities of the other classes and so achieves the aim of NL. Using the two methods above (PL and NL) to separately train a ...

Paper walkthrough of "NLNL: Negative Learning for Noisy Labels" - Zhihu. 0x01 Introduction: I have recently been working on a data-filtering project and read several papers on label noise. Today I will discuss one of them, the ICCV 2019 paper on noisy labels, "NLNL: Negative Learning for Noisy Labels". Paper link: …

PDF NLNL: Negative Learning for Noisy Labels. ... trained directly with a given noisy label; thus overfitting to a noisy label can occur even if the pruning or cleaning process is performed. Meanwhile, we use the NL method, which indirectly uses noisy labels, thereby avoiding the problem of memorizing the noisy label and exhibiting remarkable performance in filtering only noisy samples. Using ...

Joint Negative and Positive Learning for Noisy Labels. ... commonly use the given labels in a direct manner, i.e., "input image belongs to this label" (Positive Learning; PL). This behavior carries the risk of providing faulty information to the CNNs when noisy labels are involved. Motivated by this reason, Negative Learning for Noisy Labels; NLNL [12], which is an indirect learning method ...

NLNL: Negative Learning for Noisy Labels - IEEE Computer Society. Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The classical method of training CNNs is by labeling images in a supervised manner as in ...

Joint Negative and Positive Learning for Noisy Labels. NLNL further employs a three-stage pipeline to improve convergence. As a result, filtering noisy data through the NLNL pipeline is cumbersome, increasing the training cost. In this study, we propose a novel improvement of NLNL, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage.

NLNL: Negative Learning for Noisy Labels - CORE. Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).

NLNL: Negative Learning for Noisy Labels - Semantic Scholar. A novel improvement of NLNL is proposed, named Joint Negative and Positive Learning (JNPL), that unifies the filtering pipeline into a single stage, allowing greater ease of practical use compared to NLNL. Citing work: Decoupling Representation and Classifier for Noisy Label Learning. Hui Zhang, Quanming Yao.

NLNL: Negative Learning for Noisy Labels - Semantic Scholar Figure 1: Conceptual comparison between Positive Learning (PL) and Negative Learning (NL). Regarding noisy data, while PL provides CNN the wrong information (red balloon), with a higher chance, NL can provide CNN the correct information (blue balloon) because a dog is clearly not a bird. - "NLNL: Negative Learning for Noisy Labels"

PDF Negative Learning for Noisy Labels - UCF CRCV. Slide outline: Label Correction; Correct Directly; Re-Weight; Backwards Loss Correction; Forward Loss Correction; Sample Pruning; Suggested Solution - Negative Learning; Proposed Solution; Utilizing the proposed NL; Selective Negative Learning and Positive Learning (SelNLPL) for filtering; Semi-supervised learning; Architecture.
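The "SelNLPL for filtering" step can be pictured as keeping only the samples whose predicted probability for the given label clears a confidence threshold. The following is a minimal sketch of that idea, not the authors' code: the function name `select_confident`, the toy probabilities, and both thresholds (1/c for the selective NL stage, 0.5 for the selective PL stage) are illustrative assumptions that should be checked against the paper.

```python
def select_confident(probs_per_sample, given_labels, threshold):
    """Return indices of samples whose probability for the given label exceeds threshold."""
    return [
        i
        for i, (probs, label) in enumerate(zip(probs_per_sample, given_labels))
        if probs[label] > threshold
    ]

# Toy softmax outputs for three samples over three classes (made-up numbers).
probs_per_sample = [
    [0.80, 0.15, 0.05],
    [0.40, 0.35, 0.25],
    [0.10, 0.60, 0.30],
]
given_labels = [0, 0, 0]  # possibly noisy labels, all claiming class 0
num_classes = 3

# Assumed thresholds: 1/c for the selective NL stage, 0.5 for the selective PL stage.
selnl_idx = select_confident(probs_per_sample, given_labels, 1.0 / num_classes)
selpl_idx = select_confident(probs_per_sample, given_labels, 0.5)
```

In this toy data the third sample has low confidence for its given label, so it is excluded at both thresholds and would be treated as likely noisy.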

NLNL: Negative Learning for Noisy Labels - IEEE Xplore. To address this issue, we start with an indirect learning method called Negative Learning (NL), in which the CNNs are trained using a complementary label as in "input image does not belong to this complementary label."
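Training on a complementary label can be illustrated with a small pure-Python sketch. It assumes the complementary label is drawn uniformly from all classes other than the given one, and that the NL loss takes the form -log(1 - p) for the complementary class; the helper names and the toy logits are hypothetical and are not taken from the authors' repository.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_complementary_label(given_label, num_classes, rng=random):
    """Pick a label uniformly at random from every class except the given one."""
    candidates = [k for k in range(num_classes) if k != given_label]
    return rng.choice(candidates)

def positive_loss(probs, label):
    """PL: 'input belongs to this label' (standard cross-entropy term)."""
    return -math.log(probs[label])

def negative_loss(probs, comp_label, eps=1e-12):
    """NL: 'input does not belong to this label'; eps guards against log(0)."""
    return -math.log(max(1.0 - probs[comp_label], eps))

logits = [2.0, 0.5, -1.0]   # toy network output for one image
probs = softmax(logits)
given = 0                   # the (possibly noisy) given label
comp = sample_complementary_label(given, len(logits), random.Random(0))
nl = negative_loss(probs, comp)
```

The NL loss is small when the network already assigns low probability to the complementary class and grows as that probability approaches 1.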

NLNL: Negative Learning for Noisy Labels | DeepAI learning method called Negative Learning (NL), in which the CNNs are trained using a complementary label as in "input image does not belong to this complementary label." Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect

NLNL: Negative Learning for Noisy Labels | Papers With Code Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).
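A rough check on why those chances are low, under two illustrative assumptions: the complementary label $\bar{y}$ is drawn uniformly from the $c - 1$ classes other than the given label, and a fraction $r$ of the given labels is wrong. The complementary label can coincide with the true label only when the given label is wrong, so:

$$
P(\bar{y} = y_{\text{true}}) = P(\text{given label wrong}) \cdot \frac{1}{c - 1} = \frac{r}{c - 1}
$$

For example, with $c = 10$ and $r = 0.3$, NL supplies incorrect information with probability $0.3 / 9 \approx 0.033$, whereas PL on the same data does so with probability $r = 0.3$.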

NLNL: Negative Learning for Noisy Labels - CORE Reader

NLNL: Negative Learning for Noisy Labels | Request PDF - ResearchGate Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our...

NLNL: Negative Learning for Noisy Labels: Paper and Code. Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The classical method of training CNNs is by labeling images in a supervised manner as in "input image belongs to this label" (Positive Learning; PL), which is a fast and accurate method if the labels are assigned correctly to ...

NLNL: Negative Learning for Noisy Labels | Request PDF The work in [19] automatically generated complementary labels from the given noisy labels and utilized them for the proposed negative learning, incorporating the complementary labeling...

NLNL-Negative-Learning-for-Noisy-Labels/main_NL.py at master ... NLNL: Negative Learning for Noisy Labels. Contribute to ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels development by creating an account on GitHub.

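Joint Negative and Positive Learning for Noisy Labels - SlideShare. Prior work (slide 4): proposes negative learning, which supplies labels other than the correct one. About Negative Learning for Noisy Labels (NLNL)*: an indirect learning method called Negative Learning (NL). When selecting the true label is difficult, training on labels other than the true one ... data with noisy labels ...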

![[PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/3-Figure2-1.png)

![[2021 CVPR] Joint Negative and Positive Learning for Noisy ...](https://i.ytimg.com/vi/1oSExxg9txY/hqdefault.jpg)

![[PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/6-Table2-1.png)

![[PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/7-Table3-1.png)

![[PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/4-Figure3-1.png)
